Merge Sort, Divide & Conquer, Master Theorem

Class Material

- Slides can be found here.

Notations: Big-O vs. Little-o

In computational complexity theory, Big-O (denoted as ) and Little-o (denoted as ) are both used to describe the growth rates of functions, but they differ subtly in how tightly they bound the growth of one function relative to another. Below is a detailed comparison between the two:

Big-O Notation (O)

-

Definition: means that f(n) does not grow faster than g(n) up to a constant factor for sufficiently large n.

- There exist positive constants and such that:

-

Intuition:

Big-O provides an upper bound on the growth rate of . It tells us that grows at most as fast as asymptotically. It allows equality — meaning and could potentially grow at the same rate. -

Example:

because, asymptotically, is the dominant term (the other terms grow more slowly). So does not grow faster than .

Little-o Notation (o)

-

Definition: means that f(n) grows strictly slower than g(n), even without any constant factor.

- Formally, for every positive constant , there exists such that:

- This implies that the ratio as .

-

Intuition:

Little-o provides a strict upper bound on the growth rate. It says that grows strictly slower than as becomes large, and they cannot grow at the same rate. -

Example:

because grows slower than as . Specifically, the ratio .because grows at the same rate as (not strictly slower). The ratio as . (We don't need to use L'Hôpital's Rule because it is not an indeterminate form – both the numerator and denominator grow at the same rate. Since they are identical, their ratio is always 1, no matter how large becomes.)

The expression (infinity over infinity) is considered an indeterminate form in calculus. This means it is not immediately clear what the value should be, and further analysis is required to evaluate it properly.

Why is Indeterminate?

- Infinity () is a concept, not a real number. It indicates that a value grows without bound.

- When we encounter , both the numerator and the denominator are growing indefinitely, but we don’t know how fast they grow relative to each other. Depending on the functions involved, the ratio could result in different outcomes:

- Finite value: as .

- Infinity: as .

- Zero: as .

Resolving using L'Hôpital's Rule

When a limit yields the form , we often apply L'Hôpital's Rule to evaluate it. L'Hôpital’s Rule states:

provided that both and , and the derivatives and exist.

Example Using L'Hôpital's Rule

Evaluate:

Both the numerator and the denominator go to infinity, giving . Applying L'Hôpital's Rule:

Summary

is indeterminate because it can lead to different results depending on the growth rates of the functions involved. To resolve it, we need more information, often using tools like L'Hôpital's Rule or algebraic manipulation.

Key Differences between Big-O and Little-o

| Aspect | Big-O (O) | Little-o (o) |

|---|---|---|

| Bound Type | Upper bound (not strict) | Strict upper bound |

| Equality Allowed? | Yes (same growth rate possible) | No (strictly slower growth) |

| Mathematical Limit | ||

| Example Relationship |

Summary

- Big-O notation allows for equal growth rates (or can grow slower or at the same rate as ).

- Little-o notation requires that grows strictly slower than .

Both notations are useful tools in asymptotic analysis to describe how algorithms perform as input sizes grow large.

In asymptotic analysis, is the threshold beyond which the behavior of the function we’re analyzing matches the specified growth bound. Essentially, it marks the point where the asymptotic relationship becomes valid.

- When we say that , it means that after a certain input size , the inequality holds:

- For little-o notation , the idea is that for all positive constants , there exists an beyond which:

Intuition

- ensures that the asymptotic behavior is true only for large enough input sizes. For smaller values , the functions may behave unpredictably, but asymptotic notations focus on large .

Example

Say we claim that .

- For small , the term might dominate. However, for large enough , behaves similarly to .

- In this case, would be the point where for some constant . For example, with , we can say that the inequality holds for .

Summary

- is the starting point beyond which the asymptotic bound (like or ) reliably holds.

- Asymptotic notations don't care about small inputs—only what happens as matters. ensures that we focus on that asymptotic behavior.

Sorting Algorithms

Merge Sort

Resources

Lemma: No sorting algorithm can sort arbitrary numbers in comparisons

- This means that Merge Sort is optimal in terms of the number of comparisons it makes.

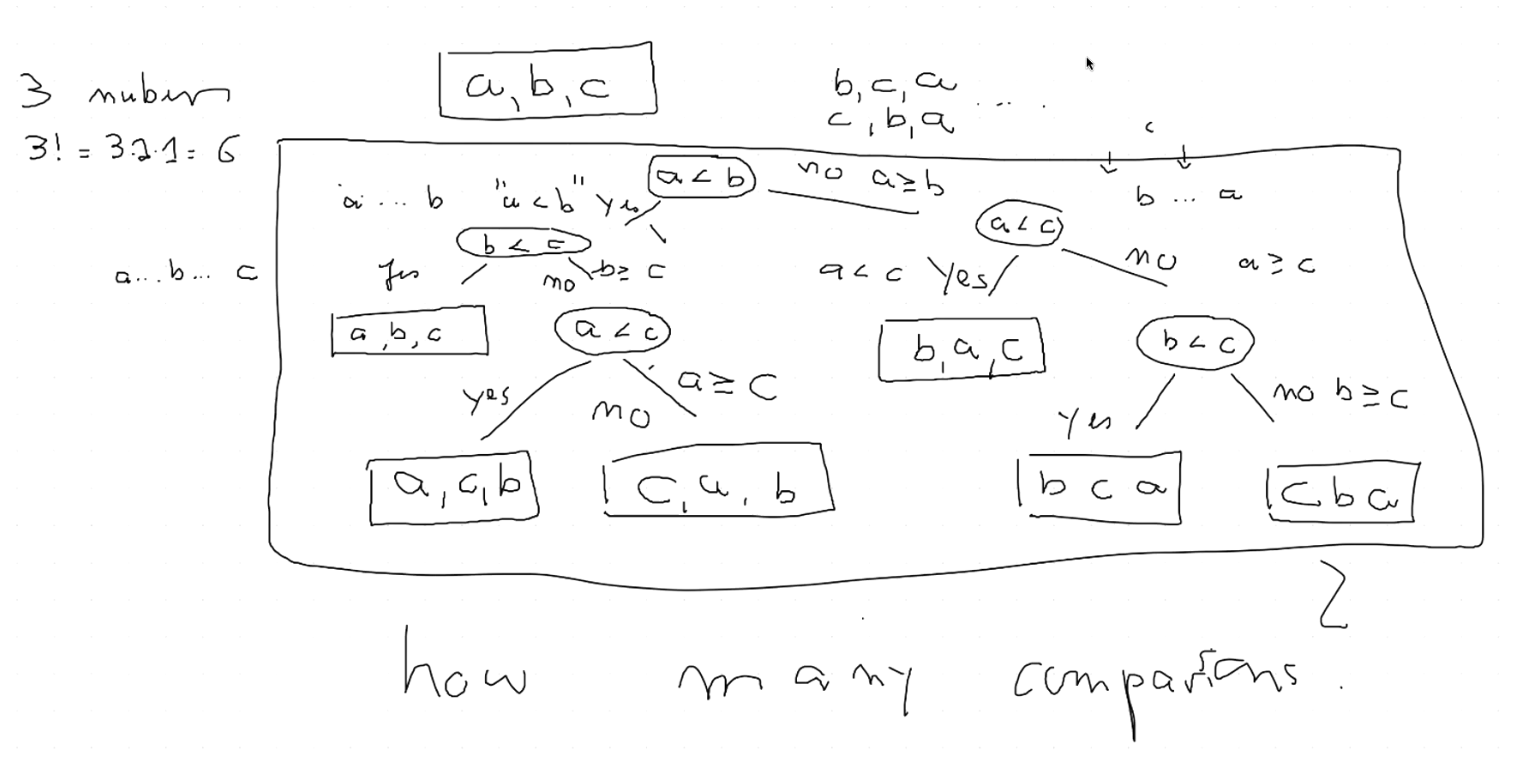

Comparison Trees

In the slides: Using Comparison Trees to describe any comparison-based algorithm.

-

The height of the tree represents the number of comparisons made by the algorithm: .

To arrive at a specific leaf, which is the correct result – a sorted permutation, we've only had to traverse at worst the max height of the tree.

-

The number of leaves represents the number of possible outcomes: . (For example: has possible permutations)

- These assume that the tree is a binary tree.

- Comparisons have exactly 2 results: "" or "".

Showing that Comparison Trees are

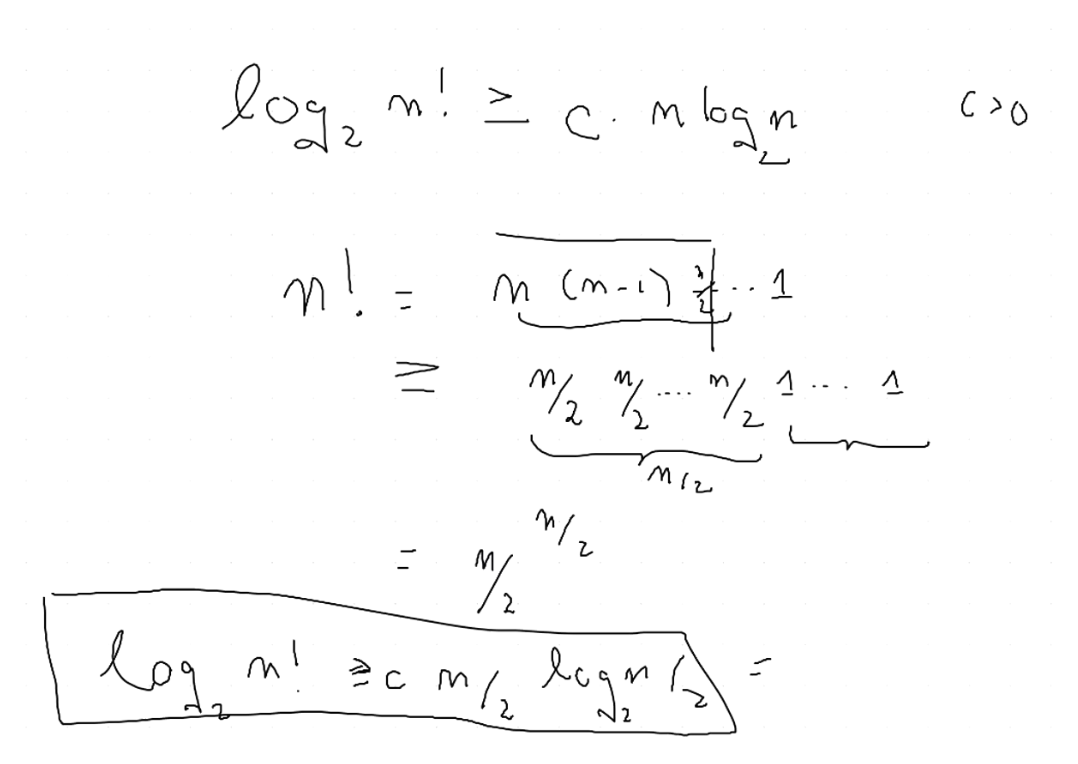

To show that is without using Stirling's approximation, we need to lower bound and compare it asymptotically with .

Step 1: Express

We start with the definition of the factorial's logarithm:

Using the logarithm of a product:

Step 2: Lower Bound

Notice that the sum can be lower-bounded by focusing on the second half of the terms (those from to ):

Tip: We can do this magic step of focusing on the second half because of the "" sign. We're looking for a lower bound, so we can ignore the first half of the terms. For graphical intution, the prof's half-baked example here might help, though it is not entirely complete.

Since for all in this range, , we can lower bound each by :

There are terms in this sum, so:

Step 3: Simplify the Lower Bound

We simplify the expression . For large , the term is insignificant, so:

Using , we get:

For large , the becomes negligible, so:

Step 4: Compare with

Now we want to compare with . If we set , the lower bound becomes:

This shows that grows asymptotically at least as fast as , which is clearly . Therefore:

Conclusion

We have shown that is without using Stirling’s approximation by directly analyzing a lower bound of the sum .

Master Theorem

Resources

- If you're having a hard time understanding the proof for Master Theorem, watching the Merge Sort video first will 100% help.